Fine-Tuning LLMs: Your Guide to PEFT, QDoRA, and Other Nifty Tricks

GenAI Series Part 5: Mastering Parameter-Efficient Fine-Tuning for Production Systems

In my previous GenAI posts, we explored LLM architecture evolution, prompt engineering fundamentals, building RAG applications, and agentic AI. Now, let's tackle the next crucial piece of the puzzle: making these powerful models truly yours.

So, you’ve got a big, powerful Large Language Model (LLM). It’s smart, but it’s not your kind of smart. You want it to write like you, code like your team, or understand the jargon of your industry.

The old way to do this was full fine-tuning. Imagine you have a master chef (your base LLM). To teach them a new recipe, full fine-tuning is like making them go through culinary school all over again. It takes months, costs a fortune, and you need a kitchen the size of a stadium (read: a multi-GPU server).

It’s slow, expensive, and frankly, overkill.

But what if you could just pass the chef a recipe card? What if you could make a few, tiny, expert-level adjustments to get the exact flavour you want?

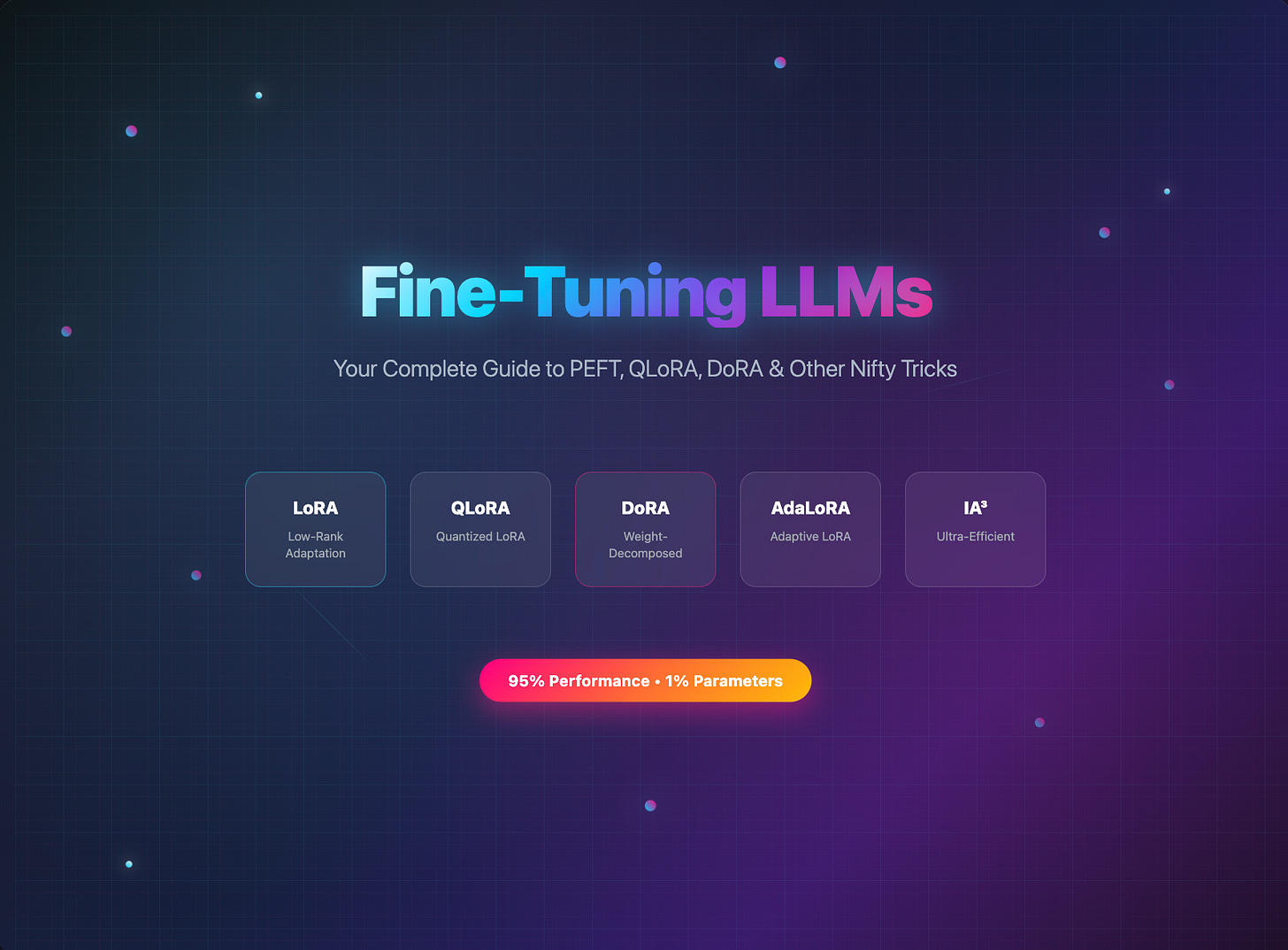

That's Parameter-Efficient Fine-Tuning (PEFT). PEFT can often get you around 95% of the performance of a full fine-tune while training less than 1% of the parameters; the exact figures depend on the model, the task, and the method you pick.
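To make the "less than 1%" figure concrete, here is a rough back-of-the-envelope sketch using LoRA, the method this guide uses as its main example. LoRA leaves a weight matrix frozen and trains two small low-rank matrices alongside it. The 4096 x 4096 layer size and rank 8 below are illustrative assumptions, not numbers from a specific model, and the function name is made up for this example.

```python
def lora_trainable_fraction(d_in: int, d_out: int, rank: int) -> float:
    """Share of one weight matrix's parameter count that a LoRA adapter trains.

    The frozen matrix has d_in * d_out parameters. LoRA adds two small
    matrices, A (rank x d_in) and B (d_out x rank), and trains only those.
    """
    full_params = d_in * d_out
    lora_params = rank * (d_in + d_out)
    return lora_params / full_params


# Assumed, illustrative sizes: a 4096 x 4096 projection with rank 8.
fraction = lora_trainable_fraction(d_in=4096, d_out=4096, rank=8)
print(f"Trainable share for this layer: {fraction:.4%}")  # 0.3906%
```

In practice adapters are usually attached to only some layers, so the share of the whole model that gets trained is typically smaller still.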

What We'll Cover in This Guide:

By the end of this journey, you'll be a PEFT pro. We'll cover the complete lifecycle:

Data Prep: How to create a dataset for fine-tuning.

The Full Workflow with LoRA: We'll use the most popular PEFT method, LoRA, as our main example to walk through training, saving, evaluation (with code!), and inference.

A Deep Dive into Advanced PEFT: We'll go beyond LoRA to understand the mechanics of QLoRA, AdaLoRA, DoRA, and IA³.

Production & Deployment: We'll learn how to merge adapters back into the base model so that inference carries no added latency.

The Ultimate Cheat Sheet: A clear framework to help you choose the right method for your job.

Ready? Let's get our hands dirty.

Ready to level up your LLM skills? Check out the Generative AI Engineering with LLMs Specialization on Coursera! This comprehensive program takes you from LLM basics to advanced production engineering, covering RAG, fine-tuning, and building complete AI systems. Want to go from dabbling to deployment? This is your next step!